From vault to platform

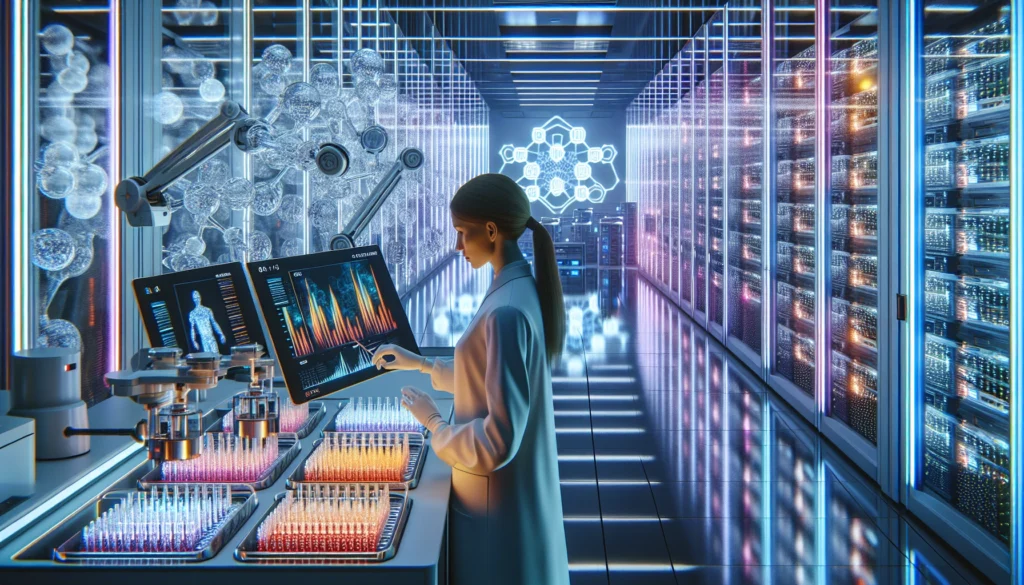

A pharma giant just did what startups dream about: it turned an expensive internal asset into a product. Eli Lilly is rolling out an AI platform trained on years of wet‑lab results, data it says cost north of $1 billion to generate. Instead of guarding those learnings behind closed doors, Lilly is letting select biotechs tap the models directly. That’s not a press-release flourish; it’s a structural shift in how discovery gets done.

Timing: FDA pressure meets AI

Regulators are pushing to reduce animal testing and accelerate preclinical work. Biotechs, meanwhile, need faster, cheaper shots on goal. In-silico pipelines sit right at that intersection. When you combine validated historical assays with focused machine learning, you get triage power: narrow the chemical search space, prioritize safer candidates, and save bench time for what actually matters.

What makes this different

Plenty of tools promise “AI for drugs.” However, few are trained on a decade-plus of proprietary wet-lab outcomes and QC’d at pharma scale. That matters. In life sciences, the edge isn’t the model du jour; it’s the hard-won, reproducible, real‑world data underneath. By productizing its corpus, Lilly isn’t just selling secrets; it’s selling judgment honed by thousands of experiments biotechs couldn’t afford to run themselves.

The business model hiding in plain sight

This looks like AI‑as‑a‑Drug‑Discovery‑Service. Partners get access to models plus embedded domain know‑how; Lilly gets non-dilutive economics, deal flow, and a front‑row seat to promising programs. Early collaborators like Circle Pharma (oncology) and insitro (small molecules) signal where this could go: targeted alliances where the platform accelerates design and safety hypotheses, and Lilly captures value through fees, milestones, or option rights. For founders, this flips capex into opex: rent the trained stack, conserve cash, and focus your burn on biology only you can do.

Conservative take: speed, but mind the dependencies

The platform is pitched as an equalizer for smaller teams, and it is, but it’s also a consolidator. Standardized tooling can set de facto norms for feature spaces, endpoints, even go/no‑go gates. That can be good for reproducibility and regulatory dialogue, but it creates reliance on a single vendor’s assumptions. If your pipeline is driven by an external model, build redundancies: orthogonal validation assays, independent benchmarks, and clear IP contours around anything you feed in.

Risks and reality checks

No model immunizes you against bad biology. Bias in historical screens, shifts in assay protocols, or unrepresented chemistries can all mislead. Interpretability isn’t optional when regulators, and later insurers, want causal reasoning, not just correlations. And governance matters: what’s the data lineage, what’s covered by your agreements, and how are conflicts handled if your best hit overlaps with the platform owner’s interests?

What great looks like for scrappy biotechs

Treat platforms like this as an acceleration lane, not autopilot. Define a narrow problem (e.g., series expansion for a tractable target or in silico tox gating), pre-register your evaluation plan, and track uplift versus your historical baseline: lower false positives, fewer animal studies, shorter cycles. If the tool can’t clear those bars, switch. If it does, scale deliberately—don’t let convenience blur scientific rigor.
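One way to make "track uplift versus your historical baseline" concrete is to fix the bar before the pilot starts and then compare false-positive fractions. The sketch below is illustrative only: `TriageResult`, `clears_bar`, the 20% threshold, and all counts are hypothetical and not drawn from Lilly's platform or any real program.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TriageResult:
    """Counts for compounds that a triage method advanced to the bench."""
    advanced: int   # compounds the method flagged as worth testing
    confirmed: int  # of those, how many the wet-lab assay confirmed

    @property
    def false_positive_fraction(self) -> float:
        """Share of advanced compounds that failed confirmation."""
        if self.advanced == 0:
            raise ValueError("no compounds advanced; fraction is undefined")
        return (self.advanced - self.confirmed) / self.advanced


def clears_bar(baseline: TriageResult, platform: TriageResult,
               min_relative_reduction: float) -> bool:
    """True if the platform cuts false positives by at least the
    pre-registered relative amount compared with the baseline."""
    base = baseline.false_positive_fraction
    new = platform.false_positive_fraction
    if base == 0:
        return False  # baseline already has no false positives to remove
    return (base - new) / base >= min_relative_reduction


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    historical = TriageResult(advanced=200, confirmed=40)
    with_platform = TriageResult(advanced=100, confirmed=40)
    # Threshold chosen up front, before looking at platform results.
    print(clears_bar(historical, with_platform, min_relative_reduction=0.2))
```

The point of passing the threshold in as an argument is that it gets written down before results arrive; the same pattern extends to cycle time or animal-study counts if you track those too.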

The bigger chess move

The center of gravity in drug discovery is tilting from tools to data stewardship. Companies with validated, longitudinal lab outcomes will set the pace, and those who make that data usable—securely, transparently, and economically—will attract the best partners. Lilly just turned a cost center into a flywheel. Expect rivals to follow, and for the line between pharma and platform to get very, very thin.

Related Article

Eli Lilly launches platform for AI-enabled drug discovery

https://finance.yahoo.com/news/eli-lilly-launches-platform-ai-110909916.html

Source: Yahoo Finance (REUTERS)

Publish Date: 09/09/2025 06:40 AM CDT
