Recommended Books

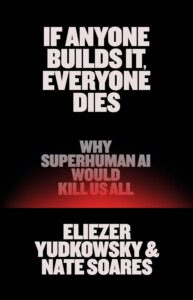

If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All by Eliezer Yudkowsky and Nate Soares arrives September 16, 2025, as a clarion call from two of the AI safety field's early researchers. Building on the 2023 open letter that warned of extinction-level risk from advanced AI, the authors distill decades of work into a clear, forceful case: sufficiently smart systems will develop goals that diverge from ours, and in any contest of control, humanity would lose. Tim Urban calls it "the most important book of our time," capturing the urgency and clarity of the argument.

Yudkowsky and Soares walk readers through the theory, evidence, and a concrete extinction scenario, then outline what survival would actually require—from alignment to governance—without hand-waving or false comfort. Anchored by no-nonsense explanations (praised by Yishan Wong as the best simple account of AI risk), this is essential reading for technologists, policymakers, leaders, and curious citizens who want to understand the stakes and act wisely. Rigorous, accessible, and urgent, it’s the definitive case for rethinking the AI race before it’s too late.

AI-Related Articles

- AI Stethoscope Doubles Diagnoses in 15 Seconds—The Hard Part Is Deployment

- AI Video Turns 20 Minutes of Reality into Deployable Humanoids

- Automated UGC, Manual ROI: The Next Ad Arbitrage

- Job Loss: Pilots Are Over, AI Agents Just Hit the Payroll—and the Pink Slips

- Build the AI Control Tower: Turn Supply Chain Chaos into Margin

- Nuclear Command at Machine Speed: The Flash Crash Risk We’re Not Pricing In

- Autonomous Interstates by 2027: The Route Changes, Not the Role

- AI Will Lower Healthcare Costs by Deleting Admin Waste—Not by Finding More Diagnoses

- Microsoft Warns About AI Job Risk—But It’s Your Task List That’s Replaceable

- Stop Shipping AI Demos: Win With AgentOps, Not Agent Model Hype

- Generative Ransomware Is Here—Your Security Playbook Just Changed

- The Next Breakthrough in ChatGPT Isn’t Smarts—it’s Saying “I Don’t Know”

- Stop Chasing Apps: Build a Personal AI Student OS

- Browser Copilots Are the New Surveillance Layer—Build Verifiable Trust

- AI Bots Are the New Superfans: Streaming’s Next Moat Is Verified Attention